10.08.2023

Artificial Intelligence Is Booming—So Is Its Carbon Footprint

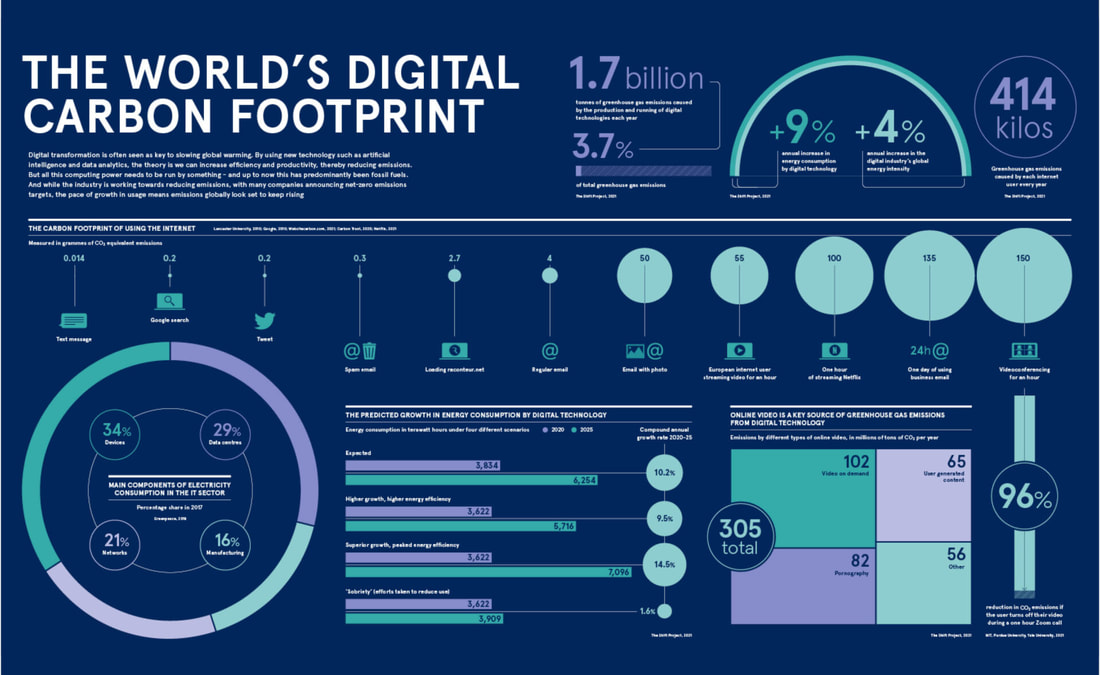

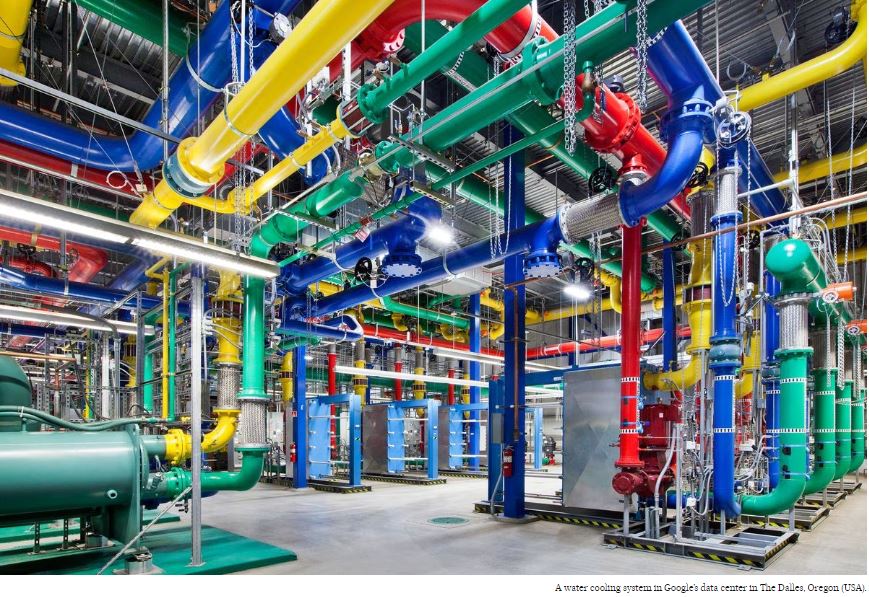

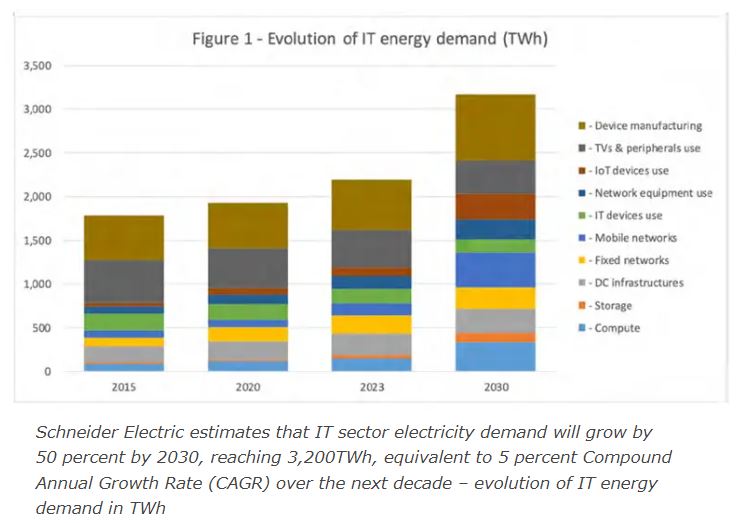

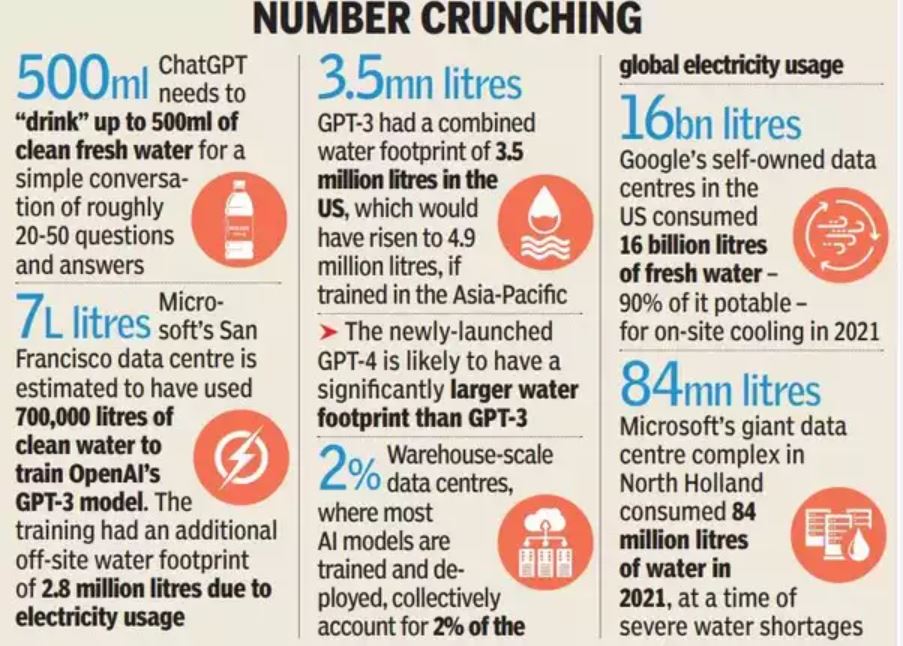

Microsoft, Alphabet’s Google and ChatGPT maker OpenAI use cloud computing that relies on thousands of chips inside servers in massive data centers across the globe to train AI algorithms called models, which analyze data to “learn” to perform tasks. AI uses more energy than other forms of computing, and training a single model can consume more electricity than 100 U.S. homes use in an entire year. Yet the sector is growing so fast, and has such limited transparency, that no one knows exactly how much total electricity use and carbon emissions can be attributed to AI.

The emissions can also vary widely depending on the type of power plant that provides the electricity. A data center that draws its electricity from a coal- or natural gas-fired plant, for instance, will be responsible for much higher emissions than one that draws power from solar or wind farms.

Most data centers use graphics processing units (GPUs) to train AI models. These specialized electronic circuits are typically used because they can execute many calculations or processes simultaneously, and they are among the most power-hungry components the chip industry makes. Today data centers run 24/7 and most derive their energy from fossil fuels, although there are increasing efforts to use renewable energy resources. Because of the energy the world’s data centers consume, they account for 3.5 to 4.7 percent of global greenhouse gas emissions, exceeding even those of the aviation industry.

Credit: Bloomberg Quicktake
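The point that emissions depend on where the electricity comes from can be made concrete with simple arithmetic: emissions equal energy used multiplied by the carbon intensity of the power source. The Python sketch below is only an illustration. The intensity figures are rough round numbers assumed for the example, not measurements, and the one-million-kWh training run is hypothetical. The function and constant names are likewise invented here.

```python
# Assumed, rounded carbon intensities in kg of CO2 per kWh.
# These are illustrative placeholders, not measured or sourced values.
CARBON_INTENSITY_KG_PER_KWH = {
    "coal": 1.0,
    "natural_gas": 0.5,
    "solar": 0.05,
    "wind": 0.01,
}

# Hypothetical energy use for one training run (an assumption for the example).
HYPOTHETICAL_TRAINING_KWH = 1_000_000


def training_emissions_tonnes(energy_kwh: float, source: str) -> float:
    """Estimate CO2 in tonnes: energy (kWh) x intensity (kg/kWh) / 1000."""
    return energy_kwh * CARBON_INTENSITY_KG_PER_KWH[source] / 1000.0


if __name__ == "__main__":
    for source in CARBON_INTENSITY_KG_PER_KWH:
        tonnes = training_emissions_tonnes(HYPOTHETICAL_TRAINING_KWH, source)
        print(f"{source}: about {tonnes:,.0f} tonnes of CO2")
```

Under these assumed figures, the same training run powered by coal would emit roughly a hundred times more CO2 than one powered by wind, which is why the location and energy mix of a data center matter as much as the amount of electricity it uses.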

Poverty deprives people of adequate education, health care and life's most basic necessities: safe living conditions (including clean air and clean drinking water) and an adequate food supply. The developed (industrialized) countries today account for roughly 20 percent of the world's population but control about 80 percent of the world's wealth.

Poverty and pollution seem to operate in a vicious cycle that, so far, has been hard to break. Even in the developed nations, the gap between the rich and the poor is evident in their respective social and environmental conditions.
